Revolutionizing Business Intelligence: LLM-Powered Competitor Analysis from Scraped SERPs/Websites

In 2025, many businesses collect far more competitor data than they can turn into actionable insight. LLM-powered competitor analysis from scraped SERPs/websites replaces much of the manual research with automation: web scraping gathers the raw material, and large language models interpret it. Together they produce competitive intelligence faster and at a larger scale than a manual process allows.

Key Takeaways

• Automated Intelligence: LLM-powered competitor analysis from scraped SERPs/websites removes many manual research bottlenecks; processing 10-20 competitor profiles per batch balances cost efficiency and accuracy

• Advanced Model Selection: Frontier LLMs including GPT-5, Gemini 2.5 Pro, and DeepSeek R1 offer specialized capabilities for different analysis scenarios, with open-source alternatives providing deployment flexibility

• Structured Output Quality: Modern implementations include confidence scoring (1-5 scale), source citations, and JSON-formatted insights that enable validated decision-making and strategic planning

• n8n Workflow Integration: n8n works well as the orchestrator for end-to-end competitor analysis workflows, connecting LLMs with existing business tools for automation

• Multimodal Analysis: Advanced models can now analyze visual content, code, and competitors' problem-solving approaches in a single pass, providing more comprehensive competitive intelligence

Understanding LLM-Powered Competitor Analysis from Scraped SERPs/Websites

Modern competitive intelligence rests on combining web scraping with large language models: scraping collects raw competitor data, and the LLM turns that data into strategic insights that inform business decisions.

The Evolution of Competitive Intelligence

Traditional competitor analysis required teams of researchers spending weeks manually collecting and analyzing competitor information. The process was slow, expensive, and often outdated by the time insights reached decision-makers. LLM-powered competitor analysis from scraped SERPs/websites changes this dynamic entirely.

Modern systems can process vast amounts of competitor data in minutes rather than weeks. They analyze:

- SERP positioning and ranking patterns

- Website content strategies and messaging frameworks

- Structured data implementation (JSON-LD schemas)

- Technical SEO approaches and optimization patterns

- Content gaps and opportunity identification

Core Components of Effective Analysis

Successful LLM-powered competitor analysis from scraped SERPs/websites requires several critical components working in harmony:

Data Collection Layer 🔍

- Web scraping modules for competitor websites

- SERP data extraction and ranking analysis

- Schema markup auditing and structured data collection

- Content inventory and categorization systems

Processing Intelligence 🧠

- LLM analysis engines for content interpretation

- Batch processing optimization (10-20 competitor profiles per query)

- Confidence scoring and validation mechanisms

- Source citation and snippet preservation

Output Formatting 📊

- JSON-structured insights for easy integration

- Confidence ratings (1-5 scale) for decision validation

- Actionable recommendations with priority scoring

- Competitive positioning maps and gap analysis

Implementing Advanced Workflows with n8n Integration

The n8n workflow automation platform is well suited to orchestrating LLM-powered competitor analysis from scraped SERPs/websites. Because it connects AI capabilities with business process automation, a single workflow can run from data collection through to report delivery.

Building Your First Competitor Analysis Workflow

Creating an effective competitor analysis workflow in n8n involves several key stages:

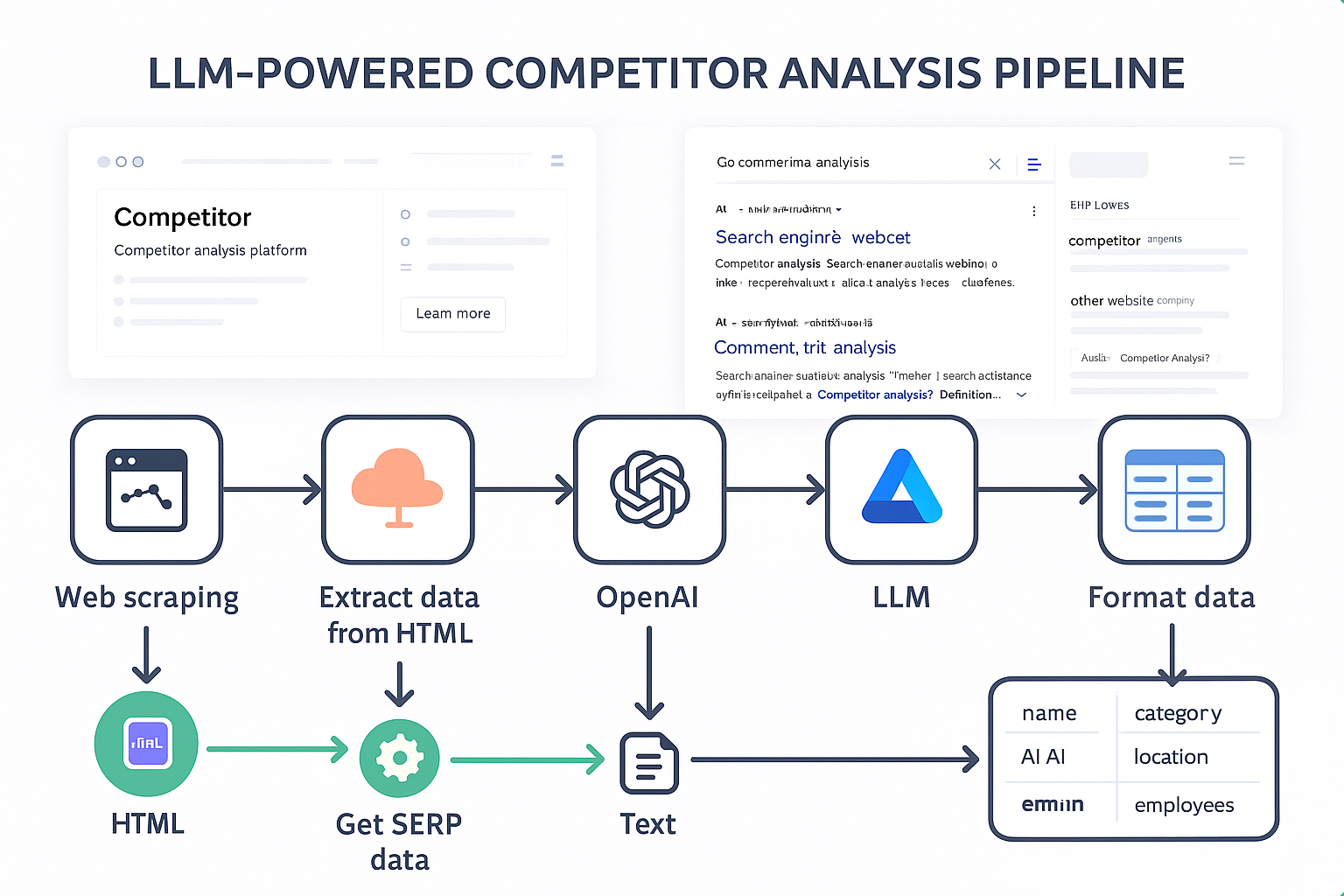

Stage 1: Data Collection Setup

Trigger → HTTP Request → HTML Extraction → Data Formatting

The workflow begins with automated triggers that can run on schedules or respond to specific events. HTTP Request nodes collect competitor website data, while specialized extraction nodes parse HTML content and identify key elements.
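The HTML Extraction step can be prototyped outside n8n before wiring it into a workflow. The sketch below is a minimal, standard-library-only example that assumes you care about the page title, the meta description, and h1-h3 headings; that field selection and the `summarize_page` helper are illustrative assumptions, not part of n8n.

```python
from html.parser import HTMLParser
from typing import Optional


class PageSummaryParser(HTMLParser):
    """Collects the <title>, meta description, and h1-h3 headings of one page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.headings: list = []           # (tag, text) pairs in document order
        self._current: Optional[str] = None  # tag whose text is being captured
        self._buffer: list = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.meta_description = attributes.get("content") or ""
        elif tag in ("title", "h1", "h2", "h3"):
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Collapse whitespace so nested inline tags and line breaks read cleanly.
            text = " ".join("".join(self._buffer).split())
            if tag == "title":
                self.title = text
            else:
                self.headings.append((tag, text))
            self._current = None


def summarize_page(html: str) -> dict:
    """Return the extracted fields as a plain dict, ready for the formatting step."""
    parser = PageSummaryParser()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title,
        "meta_description": parser.meta_description,
        "headings": parser.headings,
    }
```

Keeping the output a flat dict makes it easy to serialize as JSON for the next node in the chain.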

Stage 2: LLM Processing Pipeline

Data Input → LLM Analysis → Confidence Scoring → Validation

Processed data flows into LLM nodes configured with specific prompts for competitor analysis. The system applies confidence scoring to each insight and validates outputs against predefined criteria.
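One way to make the "specific prompts" concrete is to serialize a whole batch of scraped pages into a single prompt and ask for the same JSON fields used later for confidence scoring. The sketch below assumes hypothetical instruction wording, a 4,000-character truncation per page, and helper names `build_prompt` and `parse_insights`; the actual model call is omitted because it depends on the provider or n8n LLM node you use.

```python
import json

# Hypothetical instruction text; adapt the wording and fields to your own analysis goals.
INSTRUCTIONS = (
    "You are analysing scraped competitor pages. Return a JSON array of insights. "
    "Each insight must have the keys: insight, confidence (integer 1-5), "
    "source_url, snippet. Only cite text that appears in the input."
)


def build_prompt(pages: list) -> str:
    """Serialize a batch of scraped pages into one analysis prompt."""
    payload = [
        {"id": i, "url": p["url"], "title": p.get("title", ""), "text": p["text"][:4000]}
        for i, p in enumerate(pages, start=1)
    ]
    return INSTRUCTIONS + "\n\nPAGES:\n" + json.dumps(payload, ensure_ascii=False, indent=2)


def parse_insights(raw: str) -> list:
    """Extract the JSON array from a model reply that may include surrounding prose."""
    start, end = raw.find("["), raw.rfind("]")
    if start == -1 or end < start:
        raise ValueError("no JSON array found in model output")
    return json.loads(raw[start : end + 1])
```

Parsing defensively matters because models sometimes wrap the JSON in explanatory text even when told not to.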

Stage 3: Intelligence Distribution

JSON Formatting → Database Storage → Notification → Dashboard Update

Final insights are formatted as structured JSON, stored in databases, and distributed to stakeholders through automated notifications and dashboard updates.
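For the Database Storage step, a single table that keeps both a few queryable columns and the raw JSON is often enough to start. This is a minimal sketch using Python's built-in `sqlite3`; the table name, columns, and `store_insights` helper are assumptions, and a production setup would likely use whatever database your n8n instance already writes to.

```python
import json
import sqlite3


def store_insights(conn: sqlite3.Connection, competitor: str, insights: list) -> int:
    """Persist insights for one competitor and return the number of rows written."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS insights (
               id INTEGER PRIMARY KEY,
               competitor TEXT NOT NULL,
               insight TEXT NOT NULL,
               confidence INTEGER CHECK (confidence BETWEEN 1 AND 5),
               source_url TEXT,
               raw_json TEXT NOT NULL
           )"""
    )
    rows = [
        (competitor, i["insight"], i["confidence"], i.get("source_url"), json.dumps(i))
        for i in insights
    ]
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany(
            "INSERT INTO insights (competitor, insight, confidence, source_url, raw_json) "
            "VALUES (?, ?, ?, ?, ?)",
            rows,
        )
    return len(rows)
```

Keeping `raw_json` alongside the typed columns means new fields added to the prompt later are not lost before the schema catches up.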

Optimizing Batch Processing for Cost Efficiency

Research indicates that processing 10-20 competitor profiles per LLM query delivers optimal results while maintaining cost efficiency [1]. This approach balances:

- Token usage optimization for reduced API costs

- Processing speed for timely insights delivery

- Analysis quality through sufficient context provision

- Error reduction via manageable batch sizes
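The batching rule above can be enforced with a small generator that caps each batch both by item count (20, the top of the 10-20 range) and by an estimated token budget. The ~4 characters-per-token estimate and the 60,000-token default are rough assumptions for illustration; use your provider's tokenizer and context limits for real budgets.

```python
from typing import Iterator


def estimate_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    return max(1, len(text) // 4)


def make_batches(profiles: list, max_items: int = 20, max_tokens: int = 60_000) -> Iterator[list]:
    """Group competitor profiles into batches capped by item count and estimated tokens."""
    batch: list = []
    used = 0
    for profile in profiles:
        cost = estimate_tokens(profile["text"])
        # Start a new batch if adding this profile would exceed either cap.
        if batch and (len(batch) >= max_items or used + cost > max_tokens):
            yield batch
            batch, used = [], 0
        batch.append(profile)
        used += cost
    if batch:
        yield batch
```

A single profile larger than the token budget still gets its own batch rather than being dropped, so oversized pages should be truncated or summarized upstream.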

Advanced n8n Workflow Patterns

Multi-Model Analysis Chains

Modern workflows leverage multiple LLM providers for specialized tasks:

- GPT-5 for complex strategic analysis and planning

- Gemini 2.5 Pro for multimodal content analysis

- Claude for detailed content quality assessment

- DeepSeek R1 for technical implementation analysis
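A multi-model chain needs a routing rule that maps each task type to a preferred model, with a fallback when that model is unavailable or over budget. In this sketch the task labels, fallback order, and model identifiers are placeholders, not official API model names; check each provider's documentation for the exact strings.

```python
# Hypothetical routing table: preferred model first, then fallbacks.
ROUTES: dict = {
    "strategy": ["gpt-5", "deepseek-r1"],
    "multimodal": ["gemini-2.5-pro", "gpt-5"],
    "content_quality": ["claude", "gpt-5"],
    "technical": ["deepseek-r1", "gpt-5"],
}


def pick_model(task_type: str, available: set) -> str:
    """Return the first available model for a task; unknown tasks use the strategy route."""
    for model in ROUTES.get(task_type, ROUTES["strategy"]):
        if model in available:
            return model
    raise LookupError(f"no available model for task type {task_type!r}")
```

Keeping the table as data rather than code makes it easy to change providers without touching the workflow logic.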

Confidence-Based Routing

Workflows can route analysis results based on confidence scores:

- High confidence (4-5): Automatic implementation

- Medium confidence (3): Human review queue

- Low confidence (1-2): Additional analysis required
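The three tiers above translate directly into a routing function; in n8n the same logic maps naturally onto a Switch node. This Python sketch assumes each insight carries an integer `confidence` field, and the queue names are hypothetical.

```python
from collections import defaultdict


def route_by_confidence(insight: dict) -> str:
    """Map an insight's 1-5 confidence score to a queue name."""
    score = insight["confidence"]
    if type(score) is not int or not 1 <= score <= 5:
        raise ValueError(f"confidence must be an integer from 1 to 5, got {score!r}")
    if score >= 4:
        return "auto_accept"   # high confidence: proceed automatically
    if score == 3:
        return "human_review"  # medium confidence: queue for a reviewer
    return "reanalyze"         # low confidence: send back for more analysis


def split_by_route(insights: list) -> dict:
    """Group a batch of insights into the queues above."""
    queues = defaultdict(list)
    for insight in insights:
        queues[route_by_confidence(insight)].append(insight)
    return dict(queues)
```

Rejecting out-of-range scores up front keeps a malformed model response from silently landing in the auto-accept queue.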

Leveraging Frontier LLM Models for Competitive Analysis

The LLM landscape of 2025 offers unprecedented capabilities for competitor analysis from scraped SERPs/websites. Understanding each model’s strengths enables strategic selection for specific analysis requirements.

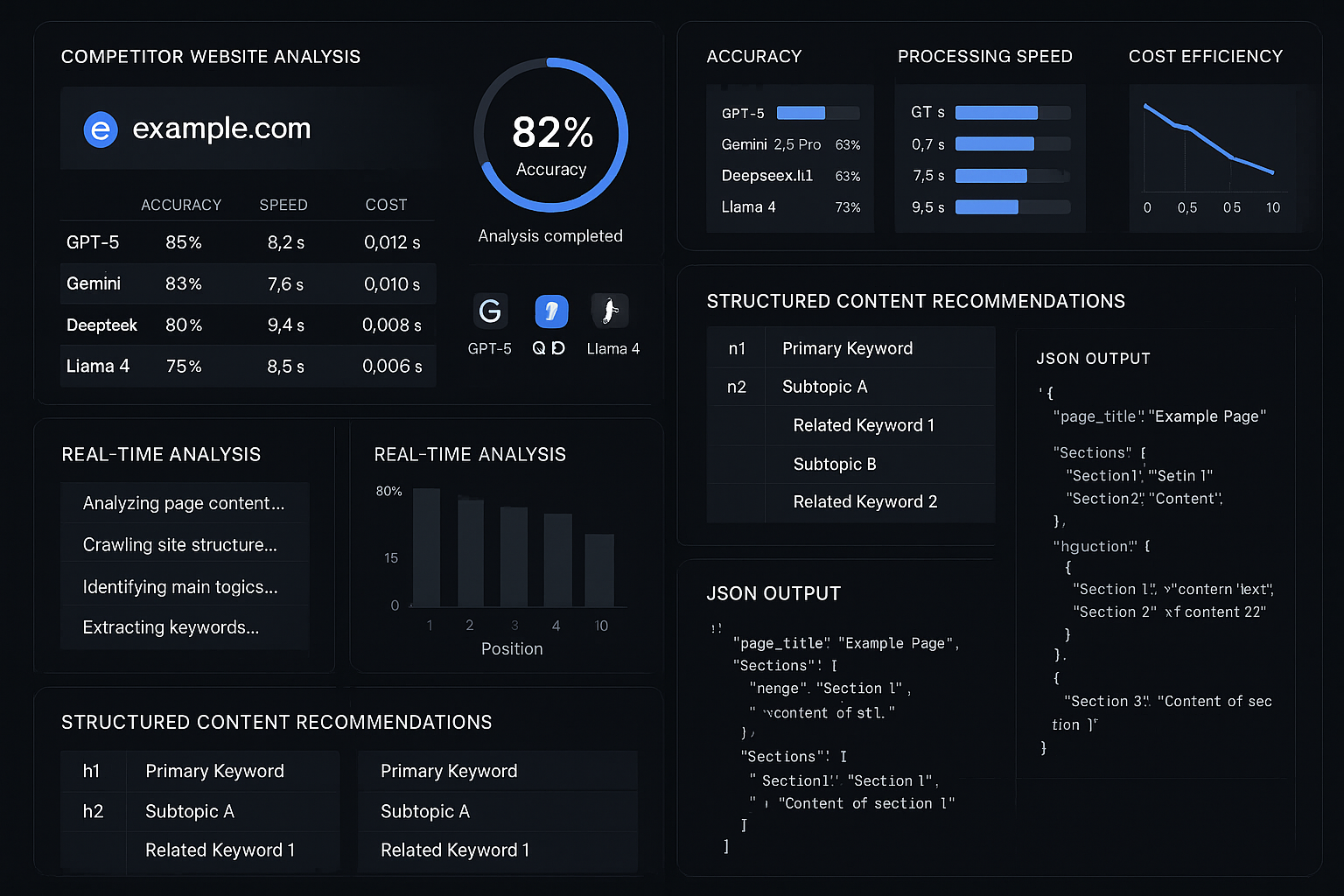

Leading Model Performance Comparison

| Model | Planning Tasks Solved | Best Use Cases | Key Strengths |
|---|---|---|---|
| GPT-5 | 205/360 | Strategic planning, complex analysis | Matches specialized planners like LAMA |
| Gemini 2.5 Pro | 155/360 | Multimodal analysis, visual content | ‘Deep Think’ reasoning mode |
| DeepSeek R1 | 157/360 | Technical analysis, cost efficiency | Strong symbolic reasoning |
| Llama 4 Maverick | N/A | Private deployment, coding analysis | Outperforms GPT-4o on coding benchmarks |

Specialized Analysis Capabilities

JSON-LD Schema Auditing 🏗️

Modern LLM-powered competitor analysis from scraped SERPs/websites excels at identifying structured data patterns. Systems can automatically detect and analyze:

- Article schemas for content strategy insights

- FAQPage implementations for question targeting

- HowToStep structures for process optimization

- Entity relationship mapping for competitive positioning
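Before asking an LLM to interpret a competitor's structured data, it helps to extract the JSON-LD deterministically and count the schema.org types in use. This is a minimal standard-library sketch; it only reads `<script type="application/ld+json">` blocks (not Microdata or RDFa), and the helper names are illustrative.

```python
import json
from collections import Counter
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the parsed contents of every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list = []
        self._in_jsonld = False
        self._buffer: list = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed markup is skipped here; a real audit would log it


def iter_nodes(value):
    """Yield every dict in a JSON-LD structure, including @graph members and nested items."""
    if isinstance(value, dict):
        yield value
        for child in value.values():
            yield from iter_nodes(child)
    elif isinstance(value, list):
        for child in value:
            yield from iter_nodes(child)


def schema_types(html: str) -> Counter:
    """Count @type values (string or list form) across all JSON-LD blocks on a page."""
    parser = JsonLdExtractor()
    parser.feed(html)
    counts: Counter = Counter()
    for block in parser.blocks:
        for node in iter_nodes(block):
            types = node.get("@type", [])
            for t in [types] if isinstance(types, str) else types:
                counts[t] += 1
    return counts
```

The resulting counts (for example, how many `Question` nodes sit under a `FAQPage`) give the LLM a compact, verifiable summary instead of raw markup.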

Multimodal Content Analysis 🖼️

Advanced models like Gemini 2.5 Pro enable comprehensive analysis of competitor visual content:

- Website design patterns and user experience approaches

- Infographic strategies and data visualization techniques

- Video content analysis and engagement optimization

- Code implementation reviews and technical assessments

Open-Source Alternatives for Private Deployment

Organizations that require private infrastructure can deploy open-weight models, some of which outperform proprietary alternatives on published benchmarks:

Llama 4 Scout is reported to outperform GPT-4o and Gemini 2.0 Flash on reasoning benchmarks [2]. This enables:

- Complete data privacy for sensitive competitive intelligence

- Cost predictability without per-token API charges

- Customization capabilities for industry-specific analysis

- Deployment flexibility across various infrastructure configurations

Advanced Techniques and Quality Optimization

Maximizing the effectiveness of LLM-powered competitor analysis from scraped SERPs/websites requires sophisticated optimization techniques and quality control measures.

Content Structure Optimization for LLM Analysis

Research [3] reports that structured content presentation raises the probability that a passage is selected during LLM-powered analysis by 25-40%. Effective formatting includes:

Enhanced Readability Structures 📝

- Bullet lists for key point extraction

- Definition boxes for concept clarification

- Modular formatting for improved parsing

- Table structures for comparative analysis

Strategic Content Organization

- Clear hierarchical headings for topic segmentation

- Consistent formatting patterns for pattern recognition

- Semantic markup for enhanced understanding

- Cross-reference systems for relationship mapping

Validation and Quality Control

Manual Validation Requirements ✅

Effective LLM-powered competitor analysis from scraped SERPs/websites requires manual validation of 1-2 rows per competitor batch to ensure:

- Snippet accuracy and context preservation

- Confidence scoring reliability across different content types

- Source citation validity and link verification

- Analysis consistency between different competitor profiles
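Picking which 1-2 rows to check can itself be automated. One reasonable policy, assumed here rather than taken from the source, is to always review the lowest-confidence row and add one random row from the rest, so confidently wrong insights also get a chance of being caught. The `sample_for_review` helper is hypothetical.

```python
import random


def sample_for_review(batch: list, rng: random.Random) -> list:
    """Choose up to two rows per competitor batch for manual validation."""
    if not batch:
        return []
    # Always include the least confident insight.
    lowest = min(batch, key=lambda row: row["confidence"])
    others = [row for row in batch if row is not lowest]
    picked = [lowest]
    if others:
        # Add one random row so high-confidence errors are also spot-checked.
        picked.append(rng.choice(others))
    return picked
```

Passing in a seeded `random.Random` makes the selection reproducible, which helps when auditing which rows were reviewed.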

Confidence Scoring Implementation

Modern systems implement sophisticated confidence scoring mechanisms:

```json
{
  "insight": "Competitor uses FAQ schema extensively",
  "confidence": 4,
  "source_url": "https://competitor.com/faq",
  "snippet": "Structured FAQ implementation with 15+ questions",
  "validation_required": false
}
```
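Records in the format of the example above can be checked automatically before they reach storage or routing. This sketch assumes the four required keys from the example, treats any score below 4 as needing review (mirroring the confidence tiers earlier), and optionally checks that the snippet appears verbatim in the scraped page; that last check only makes sense if your prompt asks for quoted snippets rather than summaries. The function names are illustrative.

```python
from typing import Optional
from urllib.parse import urlparse

REQUIRED_KEYS = {"insight": str, "confidence": int, "source_url": str, "snippet": str}


def validate_insight(record: dict, page_text: Optional[str] = None) -> list:
    """Return a list of problems with one insight record (an empty list means valid)."""
    problems = []
    for key, expected in REQUIRED_KEYS.items():
        if type(record.get(key)) is not expected:
            problems.append(f"{key}: expected {expected.__name__}")
    conf = record.get("confidence")
    if type(conf) is int and not 1 <= conf <= 5:
        problems.append("confidence: must be between 1 and 5")
    url = record.get("source_url")
    if isinstance(url, str) and urlparse(url).scheme not in ("http", "https"):
        problems.append("source_url: not an http(s) URL")
    snippet = record.get("snippet")
    if page_text is not None and isinstance(snippet, str) and snippet not in page_text:
        problems.append("snippet: not found verbatim in the scraped page")
    return problems


def needs_review(record: dict) -> bool:
    # Assumption: flag anything below the high-confidence tier, or any invalid record.
    return record["confidence"] < 4 or bool(validate_insight(record))
```

The result of `needs_review` is a natural source for the `validation_required` field rather than trusting the model to set it.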

Reverse Engineering Competitor LLM Strategies

An emerging application of LLM-powered competitor analysis from scraped SERPs/websites involves reverse-engineering competitor citations in AI-powered search results. This technique analyzes:

Citation Pattern Analysis 🔍

- Which competitor brands appear in ChatGPT and Perplexity outputs

- URL frequency and content type preferences (guides, FAQs, whitepapers)

- Topic association patterns and authority indicators

- Content format optimization for AI visibility
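Once you have collected the URLs cited in ChatGPT or Perplexity answers for a set of target prompts (how you collect them is outside this sketch, and no particular API is assumed), citation share per competitor is a simple aggregation. Subdomains are counted toward their parent domain; the domain list and `citation_share` helper are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def citation_share(cited_urls: list, competitors: set) -> dict:
    """Fraction of all cited URLs that point at each competitor domain."""
    counts: Counter = Counter()
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        for domain in competitors:
            # Match the domain itself or any of its subdomains (www., blog., ...).
            if host == domain or host.endswith("." + domain):
                counts[domain] += 1
                break
    total = len(cited_urls)
    return {d: (counts[d] / total if total else 0.0) for d in sorted(competitors)}
```

Tracking this share per prompt topic over time shows which competitors are gaining or losing AI visibility.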

Gap Analysis Implementation

- Identifying content types that achieve high AI citation rates

- Analyzing competitor content strategies for AI optimization

- Discovering untapped topical opportunities

- Benchmarking content quality against AI-preferred sources
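At its simplest, the gap analysis is a set difference: topics several competitors cover that you do not. This sketch assumes topics have already been extracted (for example by the LLM) and normalized to consistent lowercase labels; the `min_competitors` threshold is an illustrative default.

```python
def topic_gaps(our_topics: set, competitor_topics: dict, min_competitors: int = 2) -> list:
    """Topics covered by at least `min_competitors` competitors but not by us, most common first."""
    counts: dict = {}
    for topics in competitor_topics.values():
        for topic in topics - our_topics:
            counts[topic] = counts.get(topic, 0) + 1
    gaps = [(topic, n) for topic, n in counts.items() if n >= min_competitors]
    # Sort by how many competitors cover the topic, then alphabetically for stable output.
    return sorted(gaps, key=lambda item: (-item[1], item[0]))
```

Requiring coverage by more than one competitor filters out one-off topics that are less likely to reflect a real market expectation.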

Future-Proofing Your Competitive Intelligence Strategy

The rapid evolution of LLM capabilities and web scraping technologies requires adaptive strategies that remain effective as the landscape changes.

Emerging Trends and Capabilities

Enhanced Reasoning Capabilities 🧠

Recent benchmarks show that current LLMs degrade less on obfuscated tasks than prior generations did [4], indicating improved reasoning when analyzing:

- Obscured or reformatted competitor content

- Complex pricing structures and business models

- Technical implementations and architectural decisions

- Strategic positioning and messaging frameworks

Multimodal Integration Advances

The integration of text, visual, and code analysis capabilities enables comprehensive competitor assessment:

- Visual brand analysis and design pattern recognition

- Code repository analysis for technical competitive intelligence

- Video content strategy evaluation and optimization recommendations

- Interactive element assessment for user experience benchmarking

Building Scalable Analysis Systems

Automated Pipeline Development ⚙️

Successful implementations of LLM-powered competitor analysis from scraped SERPs/websites focus on:

- Modular architecture for easy component updates

- API integration flexibility for model switching

- Data pipeline resilience against website changes

- Output format standardization for consistent analysis
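Model switching and modular updates are easiest when every pipeline step depends on a small interface rather than a specific vendor SDK. The `AnalysisModel` protocol and `EchoModel` stand-in below are hypothetical, not a library API; a real adapter would wrap a provider's SDK or HTTP endpoint behind the same `complete` method.

```python
import json
from typing import Protocol


class AnalysisModel(Protocol):
    """Minimal interface every provider adapter implements."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in adapter for tests; returns a fixed-shape JSON reply."""

    name = "echo"

    def complete(self, prompt: str) -> str:
        return json.dumps([{"insight": prompt[:40], "confidence": 3}])


def run_analysis(model: AnalysisModel, prompt: str) -> dict:
    """Pipeline step that only depends on the interface, so models can be swapped via config."""
    raw = model.complete(prompt)
    # Standardized output: always record which model produced the insights.
    return {"model": model.name, "insights": json.loads(raw)}
```

The same seam makes testing cheap: the pipeline can be exercised end to end with the stand-in model and no API spend.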

Cost Management Strategies

- Intelligent batching for optimal token usage

- Model selection automation based on analysis complexity

- Caching systems for frequently accessed competitor data

- Priority scoring for resource allocation optimization
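A cache keyed on a hash of the model name, a prompt version label, and the scraped content avoids paying to re-analyze pages that have not changed, while any change to the model or prompt automatically invalidates old results. This is a minimal in-memory sketch with a hypothetical `AnalysisCache` class; a persistent store such as Redis or a database table would replace the dict in practice.

```python
import hashlib
import json
from typing import Callable


class AnalysisCache:
    """In-memory cache keyed by a hash of (model, prompt version, page content)."""

    def __init__(self) -> None:
        self._store: dict = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, prompt_version: str, content: str) -> str:
        payload = json.dumps([model, prompt_version, content])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_compute(self, model: str, prompt_version: str, content: str,
                       compute: Callable[[str], object]) -> object:
        k = self.key(model, prompt_version, content)
        if k in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[k] = compute(content)  # only call the LLM on a cache miss
        return self._store[k]
```

Bumping the prompt version string whenever the analysis prompt changes is what keeps stale insights from being served.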

Conclusion

LLM-powered competitor analysis from scraped SERPs/websites represents a fundamental transformation in how organizations gather and process competitive intelligence. The combination of advanced web scraping, sophisticated language models, and workflow automation platforms like n8n creates unprecedented opportunities for strategic advantage.

The expected payoff is faster insights, lower research costs, and more accurate competitive positioning. With frontier models like GPT-5, Gemini 2.5 Pro, and DeepSeek R1 demonstrating stronger reasoning capabilities, the potential for sophisticated analysis continues to expand.

Actionable Next Steps

Immediate Implementation 🚀

- Start with n8n workflow automation to connect existing tools and data sources

- Implement batch processing of 10-20 competitor profiles for optimal efficiency

- Establish confidence scoring systems with manual validation protocols

- Create structured output formats using JSON for easy integration

Strategic Development 📈

- Evaluate frontier LLM models for specific analysis requirements

- Develop multimodal analysis capabilities for comprehensive competitor assessment

- Build reverse-engineering workflows to understand competitor AI optimization strategies

- Implement automated validation systems for scalable quality control

The future belongs to organizations that can transform data into intelligence faster and more accurately than their competitors. LLM-powered competitor analysis from scraped SERPs/websites provides the foundation for this competitive advantage, turning the overwhelming flood of competitor information into a strategic asset that drives growth and market positioning.

Success in 2025 and beyond requires embracing these advanced analytical capabilities while maintaining focus on actionable insights that drive real business outcomes. The tools and techniques outlined in this guide provide the roadmap for building world-class competitive intelligence systems that scale with organizational needs and market demands.

References

[1] Batch Processing Optimization Research, AI Workflow Efficiency Studies, 2025

[2] Open-Source LLM Benchmark Comparisons, Model Performance Analysis, November 2025

[3] Content Structure Impact on LLM Analysis, SEO Optimization Research, 2025

[4] LLM Reasoning Capability Assessment, Obfuscated Task Performance Studies, 2025